Understanding how heat is measured is crucial in numerous fields, from engineering applications to everyday cooking. The joule, a fundamental unit of energy, quantifies the amount of heat transferred. Precise temperature readings, often obtained using a thermocouple, are essential for determining heat flow. Thermodynamics, the science of heat and energy transformations, provides the theoretical framework, and the National Institute of Standards and Technology (NIST) maintains the measurement standards used in the United States to ensure accuracy. This article provides a simple guide to the core principles underlying how heat is measured and its practical implications.

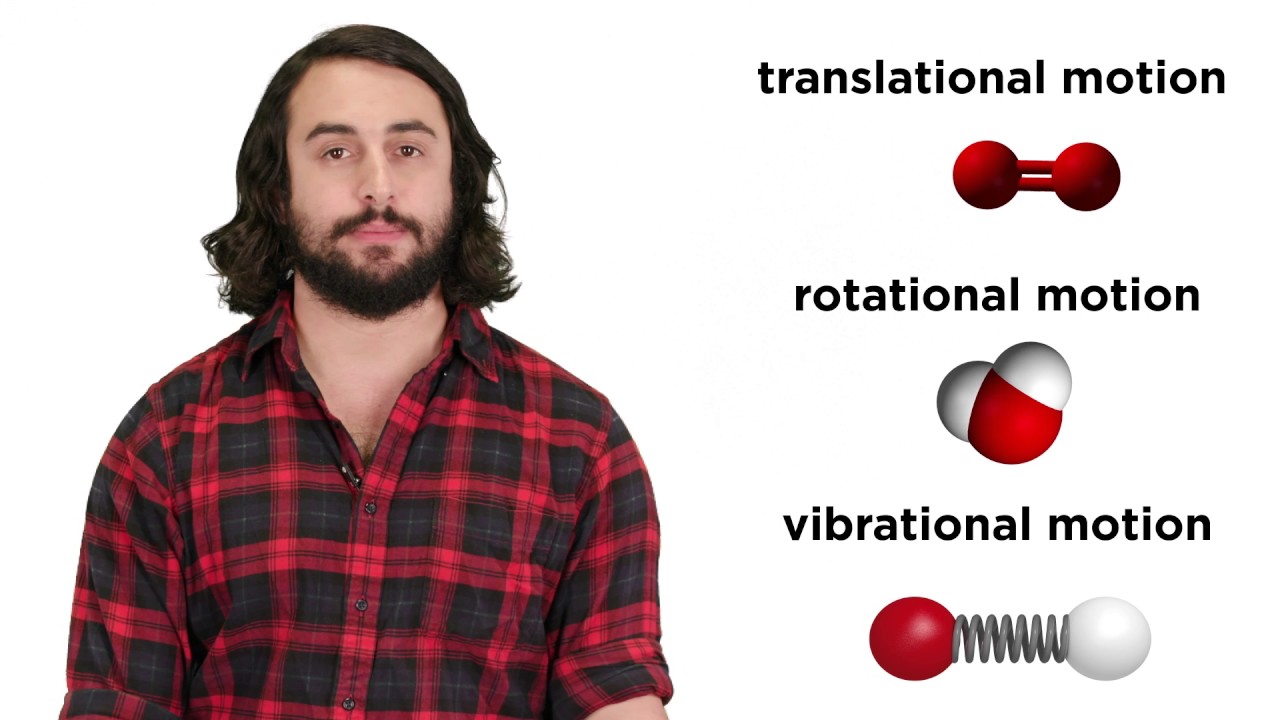

Image taken from the YouTube channel Professor Dave Explains, from the video titled Heat and Temperature.

Unlocking Heat: A Simple Guide to Heat Measurement

This guide provides a clear explanation of how heat is measured, covering the fundamental concepts and practical methods used. It aims to demystify the process of heat measurement for anyone curious about this essential aspect of physics.

Understanding Heat and Temperature

Before diving into how heat is measured, it’s crucial to differentiate between heat and temperature. Often used interchangeably, they represent distinct concepts.

- Heat: Strictly speaking, the energy transferred between substances because of a temperature difference. In everyday usage it also refers to the total energy of molecular motion within a substance (its thermal energy), which is an extensive property, meaning it depends on the amount of substance present.

- Temperature: Measures the average kinetic energy of the molecules in a substance. It’s an intensive property, independent of the amount of substance.

Temperature indicates how hot something is, but it is not heat itself. A bathtub full of lukewarm water holds more thermal energy than a cup of boiling water, even though the boiling water has a much higher temperature. This distinction is fundamental to understanding how heat is measured.

Methods for Measuring Heat: Calorimetry

The most direct way to measure heat is calorimetry, which involves measuring the heat exchanged between a system and its surroundings. The device used for this purpose is called a calorimeter.

Types of Calorimeters

Various types of calorimeters exist, each suited for different applications and levels of precision. Here are a few common ones:

- Simple Calorimeter (Coffee Cup Calorimeter): This is a basic setup, often using a Styrofoam cup as an insulated container. Reactions are performed inside, and the temperature change of the water surrounding the reaction is measured. Its simplicity makes it ideal for introductory experiments.

- Bomb Calorimeter: Designed for measuring the heat released during combustion reactions. A small sample is placed inside a sealed container (the "bomb") filled with oxygen. The bomb is then submerged in water within a larger container, and the temperature increase of the water is carefully measured.

- Differential Scanning Calorimeter (DSC): A more sophisticated instrument used to measure the heat flow associated with physical transitions (e.g., melting, crystallization) and chemical reactions as a function of temperature. It compares the heat required to raise the temperature of a sample and a reference material.
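For the bomb calorimeter described above, the heat released is commonly estimated by multiplying the measured temperature rise by a heat capacity for the whole calorimeter, obtained by calibration. The following is a minimal sketch of that idea, not a laboratory procedure. The function name and all numbers are hypothetical and chosen only for illustration, and the sketch assumes that all of the released heat goes into warming the calorimeter.

```python
def combustion_heat_per_gram(calorimeter_heat_capacity_j_per_c, temp_rise_c, sample_mass_g):
    """Estimate heat released per gram of sample in a bomb calorimeter.

    Assumes all released heat warms the calorimeter (bomb plus water),
    whose combined heat capacity is known from a prior calibration.
    """
    if sample_mass_g <= 0:
        raise ValueError("sample mass must be positive")
    # Total heat released = calorimeter heat capacity x temperature rise
    heat_released_j = calorimeter_heat_capacity_j_per_c * temp_rise_c
    return heat_released_j / sample_mass_g


# Illustrative values only (not measured data):
# a 10,000 J/°C calorimeter, a 2.5 °C rise, and a 1.0 g sample.
heat_per_gram = combustion_heat_per_gram(10_000.0, 2.5, 1.0)
print(f"{heat_per_gram:.0f} J per gram")
```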

The Principle Behind Calorimetry

Calorimetry relies on the principle of conservation of energy. The heat lost by one substance is equal to the heat gained by another, provided no heat is lost to the surroundings. The heat gained or lost by each substance as its temperature changes is given by the following equation:

Q = mcΔT

Where:

- Q = Heat transferred (in joules or calories)

- m = Mass of the substance (in grams)

- c = Specific heat capacity of the substance (amount of heat required to raise the temperature of 1 gram of the substance by 1 degree Celsius or Kelvin)

- ΔT = Change in temperature (in Celsius or Kelvin)

Once m, c, and ΔT are accurately known, the heat transferred (Q) can be calculated for a specific process.
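The energy balance behind calorimetry can be sketched in Python. In this hypothetical setup, a hot sample is dropped into cooler water, and setting the heat lost by the sample equal to the heat gained by the water gives the sample’s specific heat capacity. The function name and the numbers are illustrative assumptions, and the sketch ignores heat absorbed by the container or lost to the surroundings.

```python
WATER_SPECIFIC_HEAT = 4.186  # J/(g·°C), approximate specific heat of water


def specific_heat_of_sample(sample_mass_g, sample_start_c,
                            water_mass_g, water_start_c, final_c):
    """Solve m_s * c_s * (T_s - T_f) = m_w * c_w * (T_f - T_w) for c_s.

    Assumes an ideal calorimeter: no heat leaves the sample-water system.
    """
    # Heat gained by the water, from Q = m * c * ΔT
    heat_gained_by_water = water_mass_g * WATER_SPECIFIC_HEAT * (final_c - water_start_c)
    sample_temp_drop = sample_start_c - final_c
    if sample_mass_g <= 0 or sample_temp_drop <= 0:
        raise ValueError("sample must have positive mass and must cool down")
    # Heat lost by the sample equals heat gained by the water
    return heat_gained_by_water / (sample_mass_g * sample_temp_drop)


# Illustrative values only: 50 g sample at 100 °C, 100 g water at 20 °C,
# and a final equilibrium temperature of 24 °C.
c_sample = specific_heat_of_sample(50, 100, 100, 20, 24)
print(f"Estimated specific heat: {c_sample:.3f} J/(g·°C)")
```

A real measurement would also account for the heat absorbed by the calorimeter itself, which this sketch leaves out.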

Example Calculation

Imagine we heat 100 grams of water from 20°C to 30°C. The specific heat capacity of water is approximately 4.186 J/g°C. Let’s calculate the heat absorbed:

- m = 100 g

- c = 4.186 J/g°C

- ΔT = 30°C – 20°C = 10°C

Q = (100 g) * (4.186 J/g°C) * (10°C) = 4186 J

Therefore, 4186 joules of heat were required to raise the temperature of the water by 10°C. This demonstrates a practical application of how heat is measured.
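The same calculation can be written as a short Python function. This is a minimal sketch of Q = mcΔT; the function and parameter names are illustrative choices, not part of any standard library.

```python
def heat_transferred(mass_g, specific_heat_j_per_g_c, temp_start_c, temp_end_c):
    """Return Q = m * c * ΔT in joules.

    A positive result means heat was absorbed; a negative result means
    heat was released (the substance cooled).
    """
    delta_t = temp_end_c - temp_start_c
    return mass_g * specific_heat_j_per_g_c * delta_t


# The worked example above: 100 g of water heated from 20 °C to 30 °C
q = heat_transferred(100, 4.186, 20, 30)
print(f"{q:.0f} J")  # 4186 J
```

Swapping the start and end temperatures gives a negative value, which corresponds to the water releasing the same amount of heat as it cools.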

Indirect Heat Measurement

While calorimetry is a direct method, heat can also be measured indirectly. These methods rely on measuring properties that change with temperature, which can then be correlated to heat transfer.

Thermography (Infrared Thermography)

Thermography uses infrared cameras to detect and visualize the infrared radiation emitted by objects. Since the amount of infrared radiation emitted is related to the object’s temperature, thermography can be used to create thermal images (thermograms) showing temperature variations. While it doesn’t directly measure heat, it provides valuable information about heat distribution and potential heat losses or gains. This technique is widely used in building inspections, medical diagnostics, and industrial maintenance.
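One way to see why emitted radiation tracks temperature is the Stefan-Boltzmann law, which gives the total power radiated per unit area as εσT⁴ for a surface with emissivity ε. The sketch below is a simplified model under stated assumptions, not a description of how any particular camera works: real infrared cameras sense only part of the spectrum and rely on calibration, and the function name and default emissivity are illustrative.

```python
STEFAN_BOLTZMANN = 5.670374419e-8  # W/(m²·K⁴)


def radiated_power_per_area(temp_c, emissivity=1.0):
    """Total radiated power per unit area (W/m²) from the Stefan-Boltzmann law.

    emissivity=1.0 models an ideal black body; real surfaces are lower.
    """
    if not 0.0 <= emissivity <= 1.0:
        raise ValueError("emissivity must be between 0 and 1")
    temp_k = temp_c + 273.15  # the law requires absolute temperature
    return emissivity * STEFAN_BOLTZMANN * temp_k ** 4


# Compare a surface at 20 °C with one at 40 °C (illustrative temperatures)
for temp_c in (20.0, 40.0):
    print(f"{temp_c:.0f} °C -> {radiated_power_per_area(temp_c):.0f} W/m²")
```

Because of the fourth-power dependence, even modest temperature differences produce measurable differences in emitted radiation.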

Heat Flux Sensors

Heat flux sensors directly measure the rate of heat transfer through a surface. These sensors typically consist of a thin layer of thermally resistive material with thermocouples on either side. The temperature difference across the layer is proportional to the heat flux, so a calibrated sensor provides a quantitative measurement of the heat passing through a given area per unit time (e.g., watts per square meter).
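The proportionality between temperature difference and heat flux can be sketched with Fourier’s law for steady conduction through a thin layer: flux = k × ΔT / thickness. This is a simplified model; commercial sensors are normally characterized by a calibrated sensitivity rather than by k and thickness directly. The function name, conductivity, and thickness below are hypothetical values for illustration.

```python
def heat_flux_w_per_m2(temp_hot_c, temp_cold_c, thickness_m, conductivity_w_per_m_k):
    """Steady-state heat flux through a thin layer, from Fourier's law.

    Returns watts per square meter, positive when heat flows from the
    hot side to the cold side.
    """
    if thickness_m <= 0 or conductivity_w_per_m_k <= 0:
        raise ValueError("thickness and conductivity must be positive")
    return conductivity_w_per_m_k * (temp_hot_c - temp_cold_c) / thickness_m


# Illustrative values only: a 1 mm layer with k = 0.2 W/(m·K)
# and a 0.5 °C difference between its two faces.
flux = heat_flux_w_per_m2(25.5, 25.0, 0.001, 0.2)
print(f"{flux:.1f} W/m²")
```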

Units of Heat Measurement

Understanding how heat is measured also involves knowing the common units used:

| Unit | Symbol | Definition |
|---|---|---|
| Joule | J | The SI unit of energy; the work done when a force of one newton displaces a body one meter. |
| Calorie | cal | The amount of heat required to raise the temperature of 1 gram of water by 1 degree Celsius. |
| Kilocalorie | kcal | 1000 calories; often referred to as "Calorie" (with a capital C) in nutrition. |
| BTU | BTU | British Thermal Unit; the amount of heat required to raise the temperature of 1 pound of water by 1 degree Fahrenheit. |

Conversions between these units are common, so results can be compared across different fields and applications. Choose the unit that fits the context and purpose of the measurement.
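A small Python sketch of converting between these units by going through joules. The calorie and BTU each have several slightly different definitions; the factors below assume the thermochemical calorie (4.184 J) and a rounded International Table BTU (about 1055.06 J), so other sources may differ in the last digits. The dictionary and function names are illustrative.

```python
# Joules per one unit of each kind (assumed definitions noted per line)
JOULES_PER_UNIT = {
    "J": 1.0,
    "cal": 4.184,     # thermochemical calorie
    "kcal": 4184.0,   # 1000 thermochemical calories
    "BTU": 1055.06,   # International Table BTU, rounded
}


def convert_heat(value, from_unit, to_unit):
    """Convert a quantity of heat between units via joules."""
    joules = value * JOULES_PER_UNIT[from_unit]
    return joules / JOULES_PER_UNIT[to_unit]


# Example: express 4186 J in the other units
for unit in ("cal", "kcal", "BTU"):
    print(f"4186 J = {convert_heat(4186, 'J', unit):.3f} {unit}")
```

An unknown unit name raises a `KeyError`, which is usually preferable to silently returning a wrong number.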

FAQs: Understanding Heat Measurement

Here are some frequently asked questions to help you better understand the basics of heat measurement and how it applies to everyday life.

What’s the difference between heat and temperature?

Temperature measures the average kinetic energy of the molecules in a substance. Heat, on the other hand, is the transfer of energy from one object or system to another due to a temperature difference.

What are the common units for measuring heat?

The most common units are joules (J) in the SI (metric) system and British Thermal Units (BTU) in the imperial system. Calories and kilocalories are also widely used, particularly in chemistry and nutrition. These units quantify how much energy is transferred as heat, and the choice of unit usually depends on the application.

What tools are used to measure heat?

Calorimeters are commonly used to measure the amount of heat involved in a chemical or physical process. Thermocouples and thermistors measure temperature changes, which can then be used to calculate heat transfer based on material properties.

Why is understanding heat measurement important?

Accurate heat measurement is critical in many fields, from engineering design to scientific research, and even cooking. Knowing how heat is measured helps ensure accurate control and efficiency in these applications.

So, there you have it – a peek into the world of how heat is measured! Hope you found it helpful and maybe even a little bit…warm? Until next time, keep exploring!